The “techno-human condition” — the human inclination to use tools to mediate our environment — is natural, said Francis, but leaves us vulnerable

By Joseph Tulloch (Vatican News)

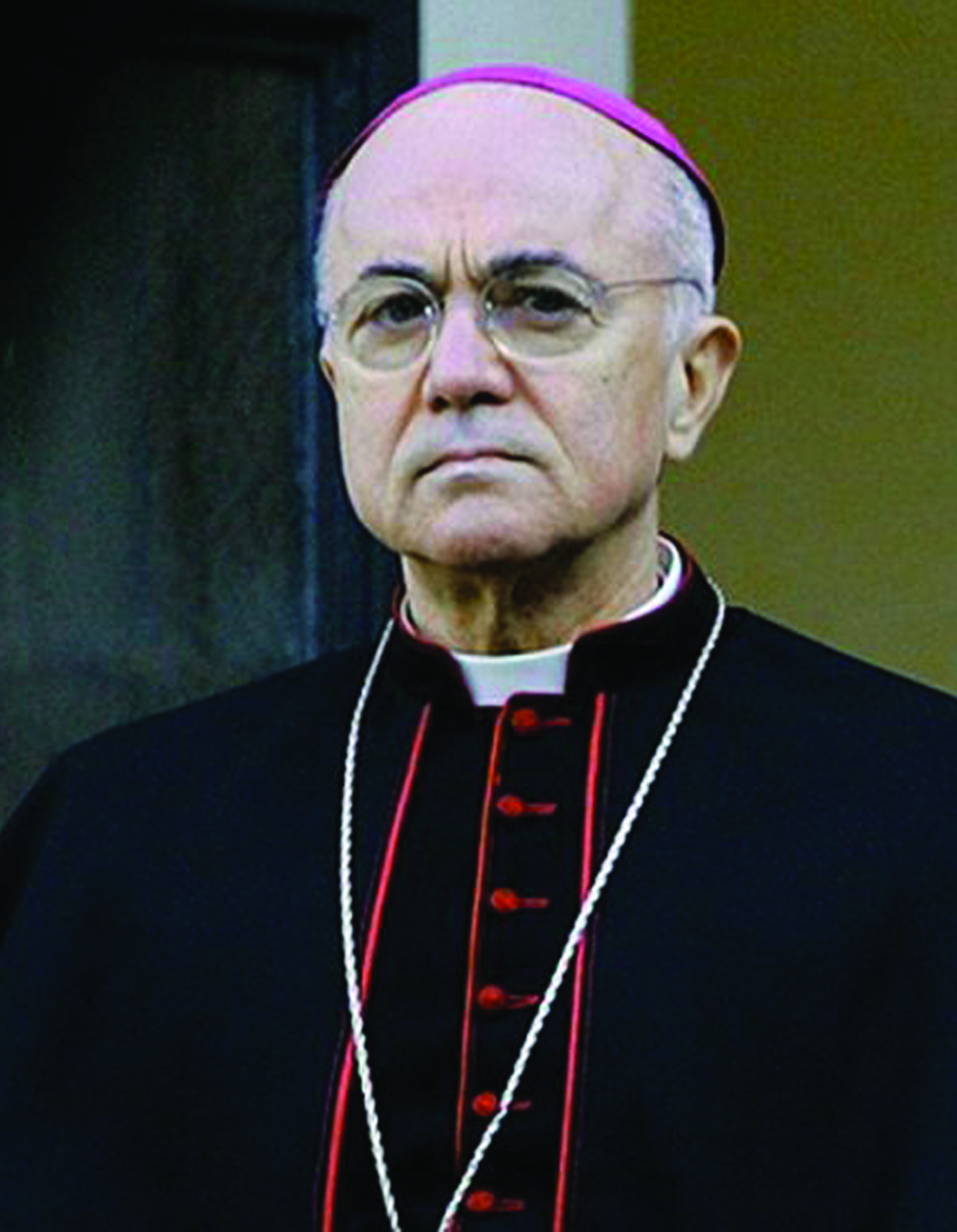

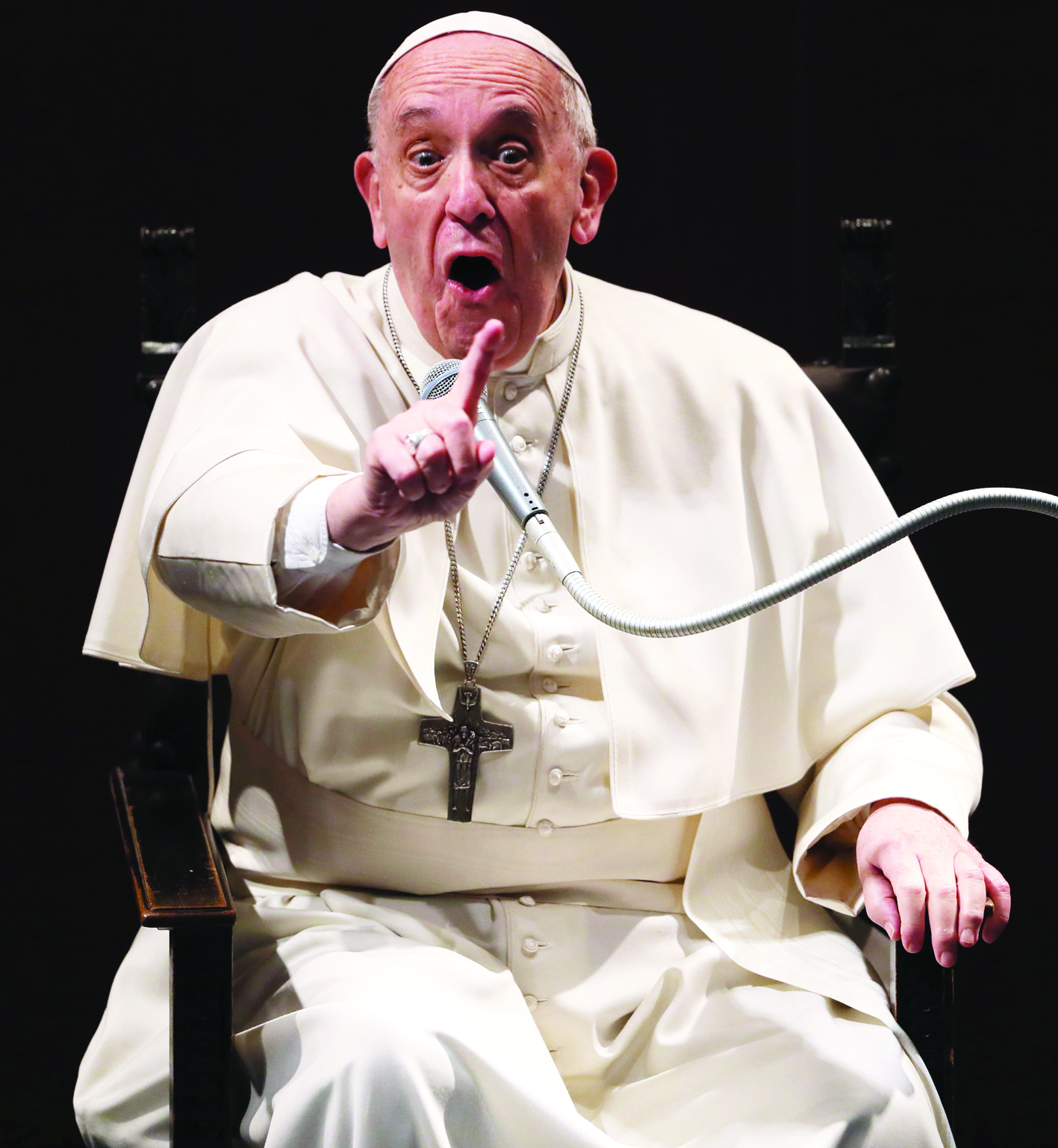

Pope Francis with some of the world’s most powerful political leaders in Italy on June 14 (VATICAN MEDIA/Ag.Romano Siciliani)

On the afternoon of Friday, June 14, Pope Francis addressed the G7 leaders’ summit in Puglia, Italy.

He is the first Pope to ever address the forum, which brings together the leaders of the US, UK, Italy, France, Canada, Germany, and Japan.

AI: Dangers and promises

The Pope dedicated his address to the G7 to the subject of artificial intelligence (AI).

Francis began by saying that the birth of AI represents “a true cognitive-industrial revolution” that will lead to “complex epochal transformations.” These transformations, the Pope said, could be positive, bringing for example the “democratization of access to knowledge,” the “exponential advancement of scientific research,” and a reduction in “demanding and arduous work.” But they could also be negative, producing, for instance, “greater injustice between advanced and developing nations or between dominant and oppressed social classes.”

The “techno-human condition”

Noting that AI is “above all a tool,” the Pope spoke of what he called the “techno-human condition.”

By this, he explained, he meant that humans’ relationship with their environment has always been mediated by the tools they produce.

Some, the Pope said, see this as a weakness or a deficiency, but he argued that it is in fact something positive. It stems, he said, from the fact that we are beings “inclined to what lies outside of us,” beings “radically open to the beyond.”

This openness, Pope Francis said, is the root not only of our “techno-human condition” but also of our openness to others and to God, and of our artistic and intellectual creativity.

Decision-making: humans vs. machines

The Pope then moved on to the subject of decision-making.

He said that AI is capable of making “algorithmic choices” — that is, “technical” choices “among several possibilities based either on well-defined criteria or on statistical inferences.”

Human beings, however, “not only choose, but in their hearts are capable of deciding.”

This is because, the Pope explained, they are capable of wisdom, of what the ancient Greeks called phronesis (a type of intelligence concerned with practical action), and of listening to Sacred Scripture.

It is therefore essential, the Pope stressed, that important decisions “always be left to the human person.”

As an example of this principle, the Pope pointed to the development of lethal autonomous weapons — which can take human life with no human input — and said that they must ultimately be banned.

Algorithms ‘neither objective nor neutral’

The Pope also stressed that the algorithms used by artificial intelligence to arrive at choices are “neither objective nor neutral.”

As an example, he pointed to algorithms designed to help judges decide whether to grant home confinement to prison inmates.

These programs, he said, make a choice based on data such as the type of offense, behavior in prison, psychological assessment, and the prisoner’s ethnic origin, educational attainment, and credit rating. However, the Pope stressed, this is reductive: “human beings are always developing, and are capable of surprising us by their actions. This is something that a machine cannot take into account.”

A further problem, the Pope emphasized, is that algorithms “can only examine realities formalized in numerical terms.”

AI-generated essays

The Pope then turned to the growing number of students who rely on AI to help them with their studies, in particular to write essays. It is easy to forget, the Pope said, that “strictly speaking, so-called generative artificial intelligence is not really ‘generative’”: it does not “develop new analyses or concepts” but rather “repeats those that it finds, giving them an appealing form.”

This, the Pope said, risks “undermining the educational process itself.”

Education, he emphasized, should offer the chance for “authentic reflection.”

Instead, it “runs the risk of being reduced to a repetition of notions, which will increasingly be evaluated as unobjectionable, simply because of their constant repetition.”

Towards an “algor-ethics”

Bringing his speech to a close, the Pope emphasized that AI is always shaped by “the worldview of those who invented and developed it.”

A particular concern in this regard, he said, is that today it is “increasingly difficult to find agreement on the major issues concerning social life.” In other words, there is less and less consensus about the philosophy that should shape artificial intelligence.

What is necessary, therefore, the Pope said, is the development of an “algor-ethics,” a series of “global and pluralistic” principles which are “capable of finding support from cultures, religions, international organizations and major corporations.”

“If we struggle to define a single set of global values,” the Pope said, we can at least “find shared principles with which to address and resolve dilemmas or conflicts regarding how to live.”

A necessary politics

Faced with this challenge, the Pope said, “political action is urgently needed.”

“Only a healthy politics, involving the most diverse sectors and skills,” the Pope stressed, is capable of dealing with the challenges and promises of artificial intelligence.

The goal, Pope Francis concluded, is not “stifling human creativity and its ideals of progress” but rather “directing that energy along new channels.”

“The basic mechanism of artificial intelligence”: A concrete example

An excerpt from Pope Francis’ address to the June 14, 2024 meeting of leaders of the G7 nations

I would like now briefly to address the complexity of artificial intelligence. Essentially, artificial intelligence is a tool designed for problem solving. It works by means of a logical chaining of algebraic operations, carried out on categories of data. These are then compared in order to discover correlations, thereby improving their statistical value. This takes place thanks to a process of self-learning, based on the search for further data and the self-modification of its calculation processes.

Artificial intelligence is designed in this way in order to solve specific problems. Yet, for those who use it, there is often an irresistible temptation to draw general, or even anthropological, deductions from the specific solutions it offers.

An important example of this is the use of programs designed to help judges in deciding whether to grant home-confinement to inmates serving a prison sentence. In this case, artificial intelligence is asked to predict the likelihood of a prisoner committing the same crime(s) again. It does so based on predetermined categories (type of offense, behavior in prison, psychological assessment, and others), thus allowing artificial intelligence to have access to categories of data relating to the prisoner’s private life (ethnic origin, educational attainment, credit rating, and others). The use of such a methodology — which sometimes risks de facto delegating to a machine the last word concerning a person’s future — may implicitly incorporate prejudices inherent in the categories of data used by artificial intelligence.

Being classified as part of a certain ethnic group, or simply having committed a minor offense years earlier (for example, not having paid a parking fine) will actually influence the decision as to whether or not to grant home-confinement. In reality, however, human beings are always developing, and are capable of surprising us by their actions. This is something that a machine cannot take into account.

It should also be noted that the use of applications similar to the one I have just mentioned will be used ever more frequently due to the fact that artificial intelligence programs will be increasingly equipped with the capacity to interact directly (chatbots) with human beings, holding conversations and establishing close relationships with them. These interactions may end up being, more often than not, pleasant and reassuring, since these artificial intelligence programs will be designed to learn to respond, in a personalized way, to the physical and psychological needs of human beings.

It is a frequent and serious mistake to forget that artificial intelligence is not another human being, and that it cannot propose general principles. This error stems either from the profound need of human beings to find a stable form of companionship, or from a subconscious assumption, namely the assumption that observations obtained by means of a calculating mechanism are endowed with the qualities of unquestionable certainty and unquestionable universality.

This assumption, however, is far-fetched, as can be seen by an examination of the inherent limitations of computation itself. Artificial intelligence uses algebraic operations that are carried out in a logical sequence (for example, if the value of X is greater than that of Y, multiply X by Y; otherwise divide X by Y). This method of calculation — the so-called “algorithm” — is neither objective nor neutral. Moreover, since it is based on algebra, it can only examine realities formalized in numerical terms.
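The rule quoted in the excerpt can be written out as a tiny program. The sketch below is a hypothetical Python illustration, not anything taken from the address: the function name `apply_rule` is ours, and the handling of a zero divisor is an assumption, since the quoted rule does not say what should happen in that case. It shows that each step is fully fixed by whoever chose the comparison and the two operations, and that the rule can only act on inputs already expressed as numbers.

```python
from numbers import Real


def apply_rule(x: Real, y: Real) -> Real:
    """Hypothetical sketch of the rule quoted in the address:
    if X is greater than Y, multiply X by Y; otherwise divide X by Y."""
    # The rule can only operate on values already formalized as numbers.
    if not isinstance(x, Real) or not isinstance(y, Real):
        raise TypeError("the rule only accepts numeric inputs")
    if x > y:
        return x * y
    # Assumption: the quoted rule is silent about a zero divisor,
    # so this sketch rejects that case instead of guessing an answer.
    if y == 0:
        raise ValueError("division by zero is undefined for this rule")
    return x / y


print(apply_rule(3, 2))  # 3 > 2, so 3 * 2; prints 6
print(apply_rule(2, 4))  # 2 is not > 4, so 2 / 4; prints 0.5
```

In this sketch every outcome follows from choices made by whoever wrote the comparison `x > y` and picked the two operations, which is one way to read the claim that such a method is neither objective nor neutral.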
